www.tensorflow.org/tutorials/generative/cvae?hl=ko

컨볼루셔널 변이형 오토인코더 | TensorFlow Core

이 노트북은 MNIST 데이터세트에서 변이형 오토인코더(VAE, Variational Autoencoder)를 훈련하는 방법을 보여줍니다(1 , 2). VAE는 오토인코더의 확률론적 형태로, 높은 차원의 입력 데이터를 더 작은 표현

www.tensorflow.org

Data load

from IPython import display

import glob

import imageio

import matplotlib.pyplot as plt

import numpy as np

import PIL

import tensorflow as tf

import tensorflow_probability as tfp

import time

(train_images, _),(test_images, _) = tf.keras.datasets.mnist.load_data()

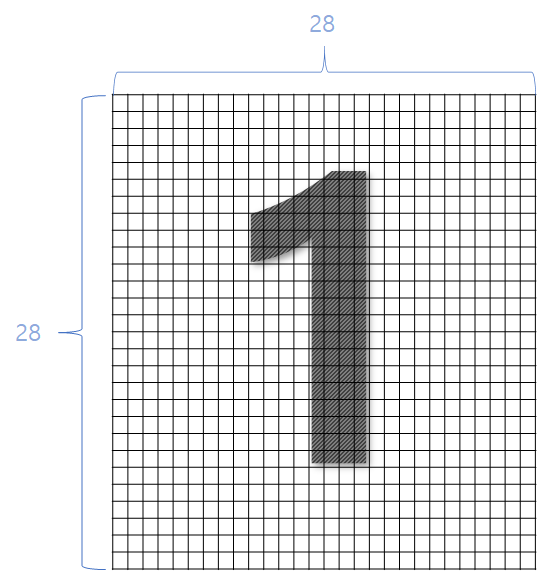

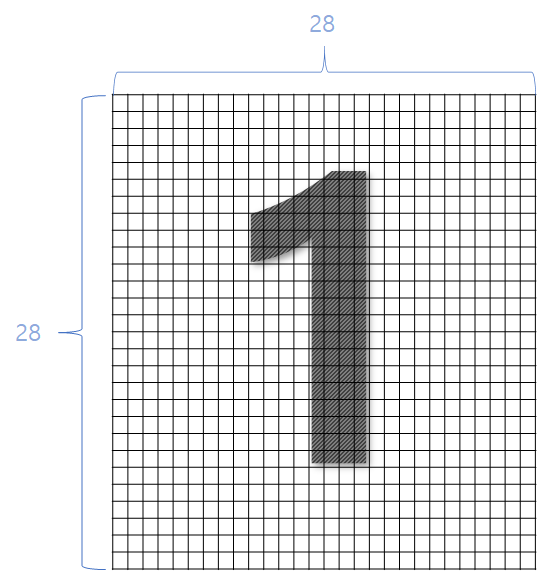

[0, 0, 0, 0, 0, 0, 0, 49, 238, 253, 253, 253, 253,253, 253, 253, 253, 251, 93, 82, 82, 56, 39, 0, 0, 0, 0, 0] ..... X 28

0~255 사이의 수로 픽셀의 강도를 나타낸다.

train_images counts : 60000

test_images counts : 10000

def preprocess_images(images):

images = images.reshape((images.shape[0],28,28,1)) / 255.

return np.where(images > .5, 1.0, 0.0).astype('float32')

train_images = preprocess_images(train_images)

test_images = preprocess_images(test_images)preprocess_images는 픽셀의 강도 0~255의 수를 0~1로 전처리시키고 0.5 초과는 1로 이하는 0으로 만들고 float32로 변형한다.

train_dataset = (tf.data.Dataset.from_tensor_slices(train_images).shuffle(train_size).batch(batch_size))

test_datasets = (tf.data.Dataset.from_tensor_slices(test_images).shuffle(test_size).batch(batch_size))

tf.data.Dataset을 이용해 데이터 배치와 셔플하기

ConvNet을 이용한, VAE CVAE model

class CVAE(tf.keras.Model):

"""Convolutional variational autoencoder"""

def __init__(self,latent_dim):

super(CVAE,self).__init__()

self.latent_dim = latent_dimCVAE 모델

latent_dim : generator에 사용할 input node의 수

self.encoder = tf.keras.Sequential(

[

tf.keras.layers.InputLayer(input_shape=(28,28,1)),

tf.keras.layers.Conv2D(filters=32,kernel_size=3,strides=(2,2),activation='relu'),

tf.keras.layers.Conv2D(filters=64,kernel_size=3,strides=(2,2),activation='relu'),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(latent_dim+latent_dim),

]

),encoder

Tensorflow 예제에서 구현을 단순화 하기 위해 Sequential을 사용했다.

InputLayer: image_dim (28,28,1)

Conv2D:

filters: 몇 개의 다른 종류의 필터를 사용할지, 출력 모양의 depth를 정함

kernel_size: 연산을 수행할 때 윈도우의 크기

strides: 윈도우가 움직일 간격

activation: 연산 종류 'relu'

depth: 32 -> 64

Flatten: 배열 flat으로 ___________

Dense: 출력 뉴런 수 latent_dim + latent_dim

self.decoder = tf.keras.Sequential(

[

tf.keras.layers.InputLayer(input_shape=(latent_dim,)),

tf.keras.layers.Dense(units=7*7*32,activation=tf.nn.relu),

tf.keras.layers.Reshape(target_shape=(7,7,32)),

tf.keras.layers.Conv2DTranspose(filters=64,kernel_size=3,strides=2,padding='same'),

tf.keras.layers.Conv2DTranspose(filters=32,kernel_size=3,strides=2,padding='same'),

tf.keras.layers.Conv2DTranspose(filters=1,kernel_size=3,strides=1,padding='same')

]

)

decoder

InputLayer: latent_dim 현재 input node의 수

Dense: units 출력 수 7*7*32, 연산 'relu' 출력 뉴런수

Reshape: 7*7*32->(7,7,32)

Conv2DTranspose:

filters: 몇 개의 다른 종류의 필터를 사용할지, 출력 모양의 depth를 정함

kernel_size: 연산을 수행할 때 윈도우의 크기

strides: 윈도우가 움직일 간격

padding: 'same' 출력 크기 동일

Conv2DTranspose로 복원

depth: 64 - > 32 -> 1

def sample(self,eps=None):

if eps is None:

eps = tf.random.normal(shape=(100,self.latent_dim))

return self.decode(eps,apply_sigmoid=True)$z = \mu + \sigma \odot \epsilon$

sample 함수는 epsepson를 통해 인코더의 그래디언트를 역전파하면서 무질서도를 유지할 수 있다.

def encode(self,x):

mean, logvar = tf.split(self.encoder(x),num_or_size_splits=2,axis=1)

return mean, logvarencode

num_or_size_splits = 2 : integer, then value is split along the dimension axis into num_or_size_splits smaller tensors.

더 작은텐서로나눈다.

mean = $\mu$

logva = $\sigma$

def reparameterize(self,mean,logvar):

eps = tf.random.normal(shape=mean.shape)reparameterize 무작위 샘플링

def decode(self,z,apply_sigmoid=False):

logits = self.decoder(z)

if apply_sigmoid:

probs = tf.sigmoid(logits)

return probs

return logitsdecode 랜덤 샘플링 sigmoid -> logits

optimizer = tf.keras.optimizers.Adam(1e-4)

def log_normal_pdf(sample, mean, logvar, raxis=1):

log2pi = tf.math.log(2.*np.pi)

return tf.reduce_sum(

-.5 * ((sample-mean)**2.*tf.exp(-logvar)+logvar+log2pi),

axis=raxis)

def compute_loss(model,x):

mean, logvar = model.encode(x)

z = model.reparameterize(mean, logvar)

x_logit = model.decode(z)

cross_ent = tf.nn.sigmoid_cross_entropy_with_logits(logits=x_logit,labels=x)

logpx_z = -tf.reduce_sum(cross_ent,axis=[1,2,3])

logpz = log_normal_pdf(z,0.,0.)

logqz_x = log_normal_pdf(z,mean,logvar)

return -tf.reduce_mean(logpx_z+logpz-logqz_x)

def train_step(model,x,optimizer):

with tf.GradientTape() as tape:

loss = compute_loss(model,x)

gradients = tape.gradient(loss,model.trainable_variables)

optimizer.apply_gradients(zip(gradients,model.trainable_variables))optimizer 선언

log_normal_pdf

$E_{rec} = (-x_{ij}\log{y_{ij}}-(1-x_{ij})\log{(1-y_{ij}}))$

compute_loss

loss 계산

train_step

학습

epochs = 10

latent_dim = 2

num_examples_to_generate = 16random_vector_for_generation = tf.random.normal(

shape=[num_examples_to_generate,latent_dim])

model = CVAE(latent_dim)random_vector_for_generation

(16,2) random dim 생성

model

CVAE 모델 선언

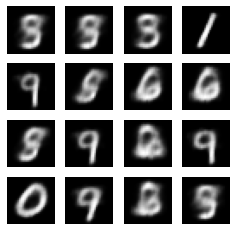

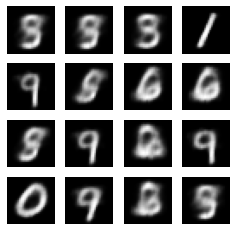

def generate_and_save_images(model, epoch, test_sample):

mean, logvar = model.encode(test_sample)

z = model.reparameterize(mean,logvar)

predictions = model.sample(z)

fig = plt.figure(figsize=(4,4))

for i in range(predictions.shape[0]):

plt.subplot(4,4,i+1)

plt.imshow(predictions[i,:,:,0],cmap='gray')

plt.axis('off')

plt.savefig('image_at_epoch_{:04d}.png'.format(epoch))

plt.show()generate_and_save_images

이미지 저장 및 생성

assert batch_size >= num_examples_to_generate

for test_batch in test_datasets.take(1):

test_sample = test_batch[0:num_examples_to_generate,:,:,:] # TensorShape([16, 28, 28, 1])

assert 프로그램 내부의 진단

batch_size(32)가 num_examples_to_genrate(16) 보다 큰 것을 확인(작을 경우 에러)

generate_and_save_images(model, 0, test_sample)

for epoch in range(1, epochs + 1):

start_time = time.time()

for train_x in train_dataset:

train_step(model, train_x, optimizer)

end_time = time.time()

loss = tf.keras.metrics.Mean()

for test_x in test_dataset:

loss(compute_loss(model, test_x))

elbo = -loss.result()

display.clear_output(wait=False)

print('Epoch: {}, Test set ELBO: {}, time elapse for current epoch: {}'

.format(epoch, elbo, end_time - start_time))

generate_and_save_images(model, epoch, test_sample)생성 후 학습 # Epoch: 10, Test set ELBO: -156.81210327148438, time elapse for current epoch: 4.33378791809082

def display_image(epoch_no):

return PIL.Image.open('image_at_epoch_{:04d}.png'.format(epoch_no))

plt.imshow(display_image(epoch))

plt.axis('off') anim_file = 'cvae.gif'

with imageio.get_writer(anim_file, mode='I') as writer:

filenames = glob.glob('image*.png')

filenames = sorted(filenames)

for filename in filenames:

image = imageio.imread(filename)

writer.append_data(image)

image = imageio.imread(filename)

writer.append_data(image)

import tensorflow_docs.vis.embed as embed

embed.embed_file(anim_file)

www.tensorflow.org/tutorials/generative/cvae?hl=ko 출처

컨볼루셔널 변이형 오토인코더 | TensorFlow Core

이 노트북은 MNIST 데이터세트에서 변이형 오토인코더(VAE, Variational Autoencoder)를 훈련하는 방법을 보여줍니다(1 , 2). VAE는 오토인코더의 확률론적 형태로, 높은 차원의 입력 데이터를 더 작은 표현

www.tensorflow.org

'👾 Deep Learning' 카테고리의 다른 글

| [Transformer] Position-wise Feed-Forward Networks (2) (0) | 2021.02.20 |

|---|---|

| [Transformer] Self-Attension 셀프 어텐션 (0) (0) | 2021.02.19 |

| Tensorflow Initializer 초기화 종류 (0) | 2021.02.18 |

| VAE(Variational Autoencoder) (2) (0) | 2021.02.18 |

| VAE(Variational Autoencoder) (1) (0) | 2021.02.18 |

www.tensorflow.org/tutorials/generative/cvae?hl=ko

컨볼루셔널 변이형 오토인코더 | TensorFlow Core

이 노트북은 MNIST 데이터세트에서 변이형 오토인코더(VAE, Variational Autoencoder)를 훈련하는 방법을 보여줍니다(1 , 2). VAE는 오토인코더의 확률론적 형태로, 높은 차원의 입력 데이터를 더 작은 표현

www.tensorflow.org

Data load

from IPython import display

import glob

import imageio

import matplotlib.pyplot as plt

import numpy as np

import PIL

import tensorflow as tf

import tensorflow_probability as tfp

import time

(train_images, _),(test_images, _) = tf.keras.datasets.mnist.load_data()

[0, 0, 0, 0, 0, 0, 0, 49, 238, 253, 253, 253, 253,253, 253, 253, 253, 251, 93, 82, 82, 56, 39, 0, 0, 0, 0, 0] ..... X 28

0~255 사이의 수로 픽셀의 강도를 나타낸다.

train_images counts : 60000

test_images counts : 10000

def preprocess_images(images):

images = images.reshape((images.shape[0],28,28,1)) / 255.

return np.where(images > .5, 1.0, 0.0).astype('float32')

train_images = preprocess_images(train_images)

test_images = preprocess_images(test_images)preprocess_images는 픽셀의 강도 0~255의 수를 0~1로 전처리시키고 0.5 초과는 1로 이하는 0으로 만들고 float32로 변형한다.

train_dataset = (tf.data.Dataset.from_tensor_slices(train_images).shuffle(train_size).batch(batch_size))

test_datasets = (tf.data.Dataset.from_tensor_slices(test_images).shuffle(test_size).batch(batch_size))

tf.data.Dataset을 이용해 데이터 배치와 셔플하기

ConvNet을 이용한, VAE CVAE model

class CVAE(tf.keras.Model):

"""Convolutional variational autoencoder"""

def __init__(self,latent_dim):

super(CVAE,self).__init__()

self.latent_dim = latent_dimCVAE 모델

latent_dim : generator에 사용할 input node의 수

self.encoder = tf.keras.Sequential(

[

tf.keras.layers.InputLayer(input_shape=(28,28,1)),

tf.keras.layers.Conv2D(filters=32,kernel_size=3,strides=(2,2),activation='relu'),

tf.keras.layers.Conv2D(filters=64,kernel_size=3,strides=(2,2),activation='relu'),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(latent_dim+latent_dim),

]

),encoder

Tensorflow 예제에서 구현을 단순화 하기 위해 Sequential을 사용했다.

InputLayer: image_dim (28,28,1)

Conv2D:

filters: 몇 개의 다른 종류의 필터를 사용할지, 출력 모양의 depth를 정함

kernel_size: 연산을 수행할 때 윈도우의 크기

strides: 윈도우가 움직일 간격

activation: 연산 종류 'relu'

depth: 32 -> 64

Flatten: 배열 flat으로 ___________

Dense: 출력 뉴런 수 latent_dim + latent_dim

self.decoder = tf.keras.Sequential(

[

tf.keras.layers.InputLayer(input_shape=(latent_dim,)),

tf.keras.layers.Dense(units=7*7*32,activation=tf.nn.relu),

tf.keras.layers.Reshape(target_shape=(7,7,32)),

tf.keras.layers.Conv2DTranspose(filters=64,kernel_size=3,strides=2,padding='same'),

tf.keras.layers.Conv2DTranspose(filters=32,kernel_size=3,strides=2,padding='same'),

tf.keras.layers.Conv2DTranspose(filters=1,kernel_size=3,strides=1,padding='same')

]

)

decoder

InputLayer: latent_dim 현재 input node의 수

Dense: units 출력 수 7*7*32, 연산 'relu' 출력 뉴런수

Reshape: 7*7*32->(7,7,32)

Conv2DTranspose:

filters: 몇 개의 다른 종류의 필터를 사용할지, 출력 모양의 depth를 정함

kernel_size: 연산을 수행할 때 윈도우의 크기

strides: 윈도우가 움직일 간격

padding: 'same' 출력 크기 동일

Conv2DTranspose로 복원

depth: 64 - > 32 -> 1

def sample(self,eps=None):

if eps is None:

eps = tf.random.normal(shape=(100,self.latent_dim))

return self.decode(eps,apply_sigmoid=True)z=μ+σ⊙ϵ

sample 함수는 epsepson를 통해 인코더의 그래디언트를 역전파하면서 무질서도를 유지할 수 있다.

def encode(self,x):

mean, logvar = tf.split(self.encoder(x),num_or_size_splits=2,axis=1)

return mean, logvarencode

num_or_size_splits = 2 : integer, then value is split along the dimension axis into num_or_size_splits smaller tensors.

더 작은텐서로나눈다.

mean = μ

logva = σ

def reparameterize(self,mean,logvar):

eps = tf.random.normal(shape=mean.shape)reparameterize 무작위 샘플링

def decode(self,z,apply_sigmoid=False):

logits = self.decoder(z)

if apply_sigmoid:

probs = tf.sigmoid(logits)

return probs

return logitsdecode 랜덤 샘플링 sigmoid -> logits

optimizer = tf.keras.optimizers.Adam(1e-4)

def log_normal_pdf(sample, mean, logvar, raxis=1):

log2pi = tf.math.log(2.*np.pi)

return tf.reduce_sum(

-.5 * ((sample-mean)**2.*tf.exp(-logvar)+logvar+log2pi),

axis=raxis)

def compute_loss(model,x):

mean, logvar = model.encode(x)

z = model.reparameterize(mean, logvar)

x_logit = model.decode(z)

cross_ent = tf.nn.sigmoid_cross_entropy_with_logits(logits=x_logit,labels=x)

logpx_z = -tf.reduce_sum(cross_ent,axis=[1,2,3])

logpz = log_normal_pdf(z,0.,0.)

logqz_x = log_normal_pdf(z,mean,logvar)

return -tf.reduce_mean(logpx_z+logpz-logqz_x)

def train_step(model,x,optimizer):

with tf.GradientTape() as tape:

loss = compute_loss(model,x)

gradients = tape.gradient(loss,model.trainable_variables)

optimizer.apply_gradients(zip(gradients,model.trainable_variables))optimizer 선언

log_normal_pdf

Erec=(−xijlogyij−(1−xij)log(1−yij))

compute_loss

loss 계산

train_step

학습

epochs = 10

latent_dim = 2

num_examples_to_generate = 16random_vector_for_generation = tf.random.normal(

shape=[num_examples_to_generate,latent_dim])

model = CVAE(latent_dim)random_vector_for_generation

(16,2) random dim 생성

model

CVAE 모델 선언

def generate_and_save_images(model, epoch, test_sample):

mean, logvar = model.encode(test_sample)

z = model.reparameterize(mean,logvar)

predictions = model.sample(z)

fig = plt.figure(figsize=(4,4))

for i in range(predictions.shape[0]):

plt.subplot(4,4,i+1)

plt.imshow(predictions[i,:,:,0],cmap='gray')

plt.axis('off')

plt.savefig('image_at_epoch_{:04d}.png'.format(epoch))

plt.show()generate_and_save_images

이미지 저장 및 생성

assert batch_size >= num_examples_to_generate

for test_batch in test_datasets.take(1):

test_sample = test_batch[0:num_examples_to_generate,:,:,:] # TensorShape([16, 28, 28, 1])

assert 프로그램 내부의 진단

batch_size(32)가 num_examples_to_genrate(16) 보다 큰 것을 확인(작을 경우 에러)

generate_and_save_images(model, 0, test_sample)

for epoch in range(1, epochs + 1):

start_time = time.time()

for train_x in train_dataset:

train_step(model, train_x, optimizer)

end_time = time.time()

loss = tf.keras.metrics.Mean()

for test_x in test_dataset:

loss(compute_loss(model, test_x))

elbo = -loss.result()

display.clear_output(wait=False)

print('Epoch: {}, Test set ELBO: {}, time elapse for current epoch: {}'

.format(epoch, elbo, end_time - start_time))

generate_and_save_images(model, epoch, test_sample)생성 후 학습 # Epoch: 10, Test set ELBO: -156.81210327148438, time elapse for current epoch: 4.33378791809082

def display_image(epoch_no):

return PIL.Image.open('image_at_epoch_{:04d}.png'.format(epoch_no))

plt.imshow(display_image(epoch))

plt.axis('off') anim_file = 'cvae.gif'

with imageio.get_writer(anim_file, mode='I') as writer:

filenames = glob.glob('image*.png')

filenames = sorted(filenames)

for filename in filenames:

image = imageio.imread(filename)

writer.append_data(image)

image = imageio.imread(filename)

writer.append_data(image)

import tensorflow_docs.vis.embed as embed

embed.embed_file(anim_file)

www.tensorflow.org/tutorials/generative/cvae?hl=ko 출처

컨볼루셔널 변이형 오토인코더 | TensorFlow Core

이 노트북은 MNIST 데이터세트에서 변이형 오토인코더(VAE, Variational Autoencoder)를 훈련하는 방법을 보여줍니다(1 , 2). VAE는 오토인코더의 확률론적 형태로, 높은 차원의 입력 데이터를 더 작은 표현

www.tensorflow.org

'👾 Deep Learning' 카테고리의 다른 글

| [Transformer] Position-wise Feed-Forward Networks (2) (0) | 2021.02.20 |

|---|---|

| [Transformer] Self-Attension 셀프 어텐션 (0) (0) | 2021.02.19 |

| Tensorflow Initializer 초기화 종류 (0) | 2021.02.18 |

| VAE(Variational Autoencoder) (2) (0) | 2021.02.18 |

| VAE(Variational Autoencoder) (1) (0) | 2021.02.18 |