https://github.com/ggerganov/whisper.cpp

GitHub - ggerganov/whisper.cpp: Port of OpenAI's Whisper model in C/C++


M1 Install

1. Installing the latest version with git clone causes a .o architecture error on M1, so download the [stable version] instead.

https://github.com/ggerganov/whisper.cpp/releases/tag/v1.2.1

Release v1.2.1 · ggerganov/whisper.cpp

Overview This is a minor release. The main reason for it is a critical bug fix that causes the software to crash randomly when the language auto-detect option is used (i.e. whisper_lang_auto_detect...


2. Extract the downloaded tar.gz archive

tar -xvf whisper.cpp-1.2.1.tar.gz

3. Change into the extracted directory

cd whisper.cpp-1.2.1
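Steps 1 to 3 can also be done entirely from the terminal. The following is a minimal sketch, assuming the release source archive is served under GitHub's usual `/archive/refs/tags/` URL pattern; the helper names `release_url` and `fetch_release` are hypothetical and not part of whisper.cpp.

```shell
# Source-archive URL for a tag, assuming GitHub's /archive/refs/tags/ pattern.
release_url() {
    printf 'https://github.com/ggerganov/whisper.cpp/archive/refs/tags/%s.tar.gz\n' "$1"
}

# Download and unpack one release (needs network access and curl).
fetch_release() {
    local tag="$1" version
    version="${tag#v}"    # v1.2.1 -> 1.2.1
    curl -L -o "whisper.cpp-${version}.tar.gz" "$(release_url "$tag")" &&
        tar -xvf "whisper.cpp-${version}.tar.gz"
}

# Usage: fetch_release v1.2.1 && cd whisper.cpp-1.2.1
```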

4. Download a model converted to the whisper.cpp format [https://github.com/ggerganov/whisper.cpp/tree/master/models]

bash ./models/download-ggml-model.sh base.en # example: download the base.en model

# bash ./models/download-ggml-model.sh [model size, e.g. large]

5. Build with make

# build the main example

make

# transcribe an audio file

./main -f samples/jfk.wav

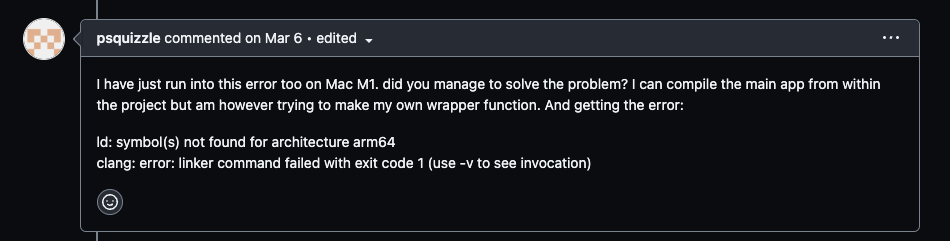

Running make on M1 fails with a clang error (https://github.com/ggerganov/whisper.cpp/issues/570). Running the compile commands by hand builds the binary:

cc -I. -O3 -DNDEBUG -std=c11 -fPIC -pthread -DGGML_USE_ACCELERATE -c ggml.c -o ggml.o

c++ -I. -I./examples -O3 -DNDEBUG -std=c++11 -fPIC -pthread -c whisper.cpp -o whisper.o

c++ -I. -I./examples -O3 -DNDEBUG -std=c++11 -fPIC -pthread examples/main/main.cpp examples/common.cpp ggml.o whisper.o -o main -framework Accelerate

# the main binary is now built
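If the build still reports an architecture error on the .o files, it can help to check which architecture the shell and the object files actually use. The sketch below is only a diagnostic aid: `arch_hint` is a hypothetical helper, and the idea that a non-arm64 shell (for example one running under Rosetta) is involved is an assumption, not something the post or the linked issue confirms.

```shell
# Map the machine string from uname -m to a short hint.
arch_hint() {
    case "$1" in
        arm64)  echo "native arm64 shell" ;;
        x86_64) echo "x86_64 shell (possibly Rosetta); objects may not match arm64" ;;
        *)      echo "unrecognized architecture: $1" ;;
    esac
}

arch_hint "$(uname -m)"

# Show what each object file was built for, if it exists.
for obj in ggml.o whisper.o; do
    if [ -f "$obj" ]; then
        file "$obj"
    fi
done
```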

6. Convert the test file

ffmpeg -i input.mp3 -ar 16000 -ac 1 -c:a pcm_s16le output.wav # use ffmpeg to convert a higher sample-rate file to 16 kHz mono 16-bit PCM WAV
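When there are several recordings, the same ffmpeg command can be wrapped in a loop. This is a rough sketch; the function names and the `.16k.wav` output naming are made up for illustration, and the directory layout is an assumption.

```shell
# Derive the output name: "talk.mp3" -> "talk.16k.wav" (hypothetical naming).
wav_name_for() {
    local src="$1"
    printf '%s.16k.wav\n' "${src%.*}"
}

# Convert every .mp3 in a directory (default: current directory)
# with the same ffmpeg options as the single-file command above.
convert_dir() {
    local dir="${1:-.}" src
    for src in "$dir"/*.mp3; do
        [ -e "$src" ] || continue    # no matches: the glob stays literal
        ffmpeg -nostdin -y -i "$src" -ar 16000 -ac 1 -c:a pcm_s16le "$(wav_name_for "$src")"
    done
}

# Usage: convert_dir ./recordings
```

`-y` overwrites existing output files and `-nostdin` keeps ffmpeg from reading the terminal inside the loop; drop `-y` if overwriting is not wanted.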

7. Test

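# ./main -m ./models/ggml-[model size].bin -f [file].wav -ml [max-len]

# *.en.bin -> English-only model

# -ml = --max-len (maximum segment length in characters)

# -l ko -> sets the spoken language to Korean (needs a multilingual model, not *.en); add -tr (--translate) to translate into English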

./main -m ./models/ggml-base.en.bin -f ./samples/jfk.wav -ml 16
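The model download and the test run can be combined into one small helper. This is a sketch under the assumption that it is run from the whisper.cpp-1.2.1 directory and that the download script saves models as `./models/ggml-[model size].bin`, as the command pattern above suggests; `model_path` and `transcribe` are hypothetical names.

```shell
# Path of a downloaded model, following the ggml-[model size].bin pattern.
model_path() {
    printf './models/ggml-%s.bin\n' "$1"
}

# Run ./main on one WAV file; fetch the model first if it is missing.
transcribe() {
    local model="$1" wav="$2" max_len="${3:-16}" bin
    bin="$(model_path "$model")"
    [ -f "$bin" ] || bash ./models/download-ggml-model.sh "$model"
    ./main -m "$bin" -f "$wav" -ml "$max_len"
}

# Usage:
#   transcribe base.en ./samples/jfk.wav 16
```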

8. Result

system_info: n_threads = 4 / 8 | AVX = 0 | AVX2 = 0 | AVX512 = 0 | FMA = 0 | NEON = 1 | ARM_FMA = 1 | F16C = 0 | FP16_VA = 1 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 0 | VSX = 0 |

main: processing './samples/jfk.wav' (176000 samples, 11.0 sec), 4 threads, 1 processors, lang = en, task = transcribe, timestamps = 1 ...

[00:00:00.000 --> 00:00:00.850] And so my

[00:00:00.850 --> 00:00:01.590] fellow

[00:00:01.590 --> 00:00:04.140] Americans, ask

[00:00:04.140 --> 00:00:05.660] not what your

[00:00:05.660 --> 00:00:06.840] country can do

[00:00:06.840 --> 00:00:08.430] for you, ask

[00:00:08.430 --> 00:00:09.440] what you can do

[00:00:09.440 --> 00:00:10.020] for your

[00:00:10.020 --> 00:00:11.000] country.

whisper_print_timings: fallbacks = 0 p / 0 h

whisper_print_timings: load time = 106.06 ms

whisper_print_timings: mel time = 15.37 ms

whisper_print_timings: sample time = 11.49 ms / 27 runs ( 0.43 ms per run)

whisper_print_timings: encode time = 246.60 ms / 1 runs ( 246.60 ms per run)

whisper_print_timings: decode time = 63.65 ms / 27 runs ( 2.36 ms per run)

whisper_print_timings: total time = 455.18 ms
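The numbers in this log can be turned into a rough speed figure. The sketch below takes the sample count and total time from the output above and assumes the 16 kHz sample rate from step 6; the helper names are hypothetical.

```shell
# Audio duration in seconds from sample count and sample rate.
duration_sec() {
    LC_ALL=C awk -v n="$1" -v sr="$2" 'BEGIN { printf "%.1f\n", n / sr }'
}

# Speed relative to real time: audio length divided by total processing time.
speedup() {
    LC_ALL=C awk -v audio_s="$1" -v total_ms="$2" 'BEGIN { printf "%.1f\n", (audio_s * 1000) / total_ms }'
}

duration_sec 176000 16000    # prints 11.0
speedup 11.0 455.18          # prints 24.2
```

So the 11-second sample was processed roughly 24 times faster than real time in this run, with the model load time included in the total.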

(base) @M1 whisper.cpp-1.2.1 %

https://github.com/ggerganov/whisper.cpp

GitHub - ggerganov/whisper.cpp: Port of OpenAI's Whisper model in C/C++

Port of OpenAI's Whisper model in C/C++. Contribute to ggerganov/whisper.cpp development by creating an account on GitHub.

github.com

M1 Install

1 . git clone으로 최신 버전으로 설치할 경우 M1에서 .o architecture error 발생으로 [stable version]을 다운로드 한다.

https://github.com/ggerganov/whisper.cpp/releases/tag/v1.2.1

Release v1.2.1 · ggerganov/whisper.cpp

Overview This is a minor release. The main reason for it is a critical bug fix that causes the software to crash randomly when the language auto-detect option is used (i.e. whisper_lang_auto_detect...

github.com

2. 다운로드한 폴더를 tar.gz 파일 압축 해제

tar -xvf whisper.cpp-1.2.1.tar.gz

3. 폴더 이동

cd whisper.cpp-1.2.1

4. Whisper cpp version으로 변환된 모델 다운로드 [https://github.com/ggerganov/whisper.cpp/tree/master/models]

bash ./models/download-ggml-model.sh base.en # 기본 Base model 다운로드 예시

# bash ./models/download-ggml-model.sh [model size ex) large]

5. Build Makefile

# build the main example

make

# transcribe an audio file

./main -f samples/jfk.wav

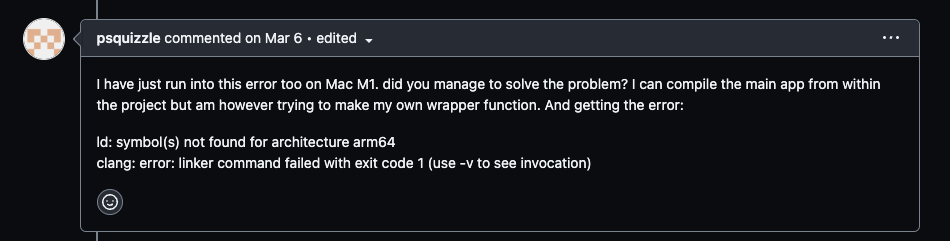

m1에서 make 시 clang 에러를 마주하게 된다. (https://github.com/ggerganov/whisper.cpp/issues/570)

cc -I. -O3 -DNDEBUG -std=c11 -fPIC -pthread -DGGML_USE_ACCELERATE -c ggml.c -o ggml.o

c++ -I. -I./examples -O3 -DNDEBUG -std=c++11 -fPIC -pthread -c whisper.cpp -o whisper.o

c++ -I. -I./examples -O3 -DNDEBUG -std=c++11 -fPIC -pthread examples/main/main.cpp examples/common.cpp ggml.o whisper.o -o main -framework Accelerate

# main file make complete

6. Test File 변환

ffmpeg -i input.mp3 -ar 16000 -ac 1 -c:a pcm_s16le output.wav # ffmpeg로 높은 hz 파일 변환

7. Test

# ,/main -m ./models/ggml-[model size].bin -f [file].wav -ml

# model.en.bin -> en model 사용

# -ml = --max-len

# -l language ko -> translation 사용

./main -m ./models/ggml-base.en.bin -f ./samples/jfk.wav -ml 16

8. Result

system_info: n_threads = 4 / 8 | AVX = 0 | AVX2 = 0 | AVX512 = 0 | FMA = 0 | NEON = 1 | ARM_FMA = 1 | F16C = 0 | FP16_VA = 1 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 0 | VSX = 0 |

main: processing './samples/jfk.wav' (176000 samples, 11.0 sec), 4 threads, 1 processors, lang = en, task = transcribe, timestamps = 1 ...

[00:00:00.000 --> 00:00:00.850] And so my

[00:00:00.850 --> 00:00:01.590] fellow

[00:00:01.590 --> 00:00:04.140] Americans, ask

[00:00:04.140 --> 00:00:05.660] not what your

[00:00:05.660 --> 00:00:06.840] country can do

[00:00:06.840 --> 00:00:08.430] for you, ask

[00:00:08.430 --> 00:00:09.440] what you can do

[00:00:09.440 --> 00:00:10.020] for your

[00:00:10.020 --> 00:00:11.000] country.

whisper_print_timings: fallbacks = 0 p / 0 h

whisper_print_timings: load time = 106.06 ms

whisper_print_timings: mel time = 15.37 ms

whisper_print_timings: sample time = 11.49 ms / 27 runs ( 0.43 ms per run)

whisper_print_timings: encode time = 246.60 ms / 1 runs ( 246.60 ms per run)

whisper_print_timings: decode time = 63.65 ms / 27 runs ( 2.36 ms per run)

whisper_print_timings: total time = 455.18 ms

(base) @M1 whisper.cpp-1.2.1 %'👾 Deep Learning' 카테고리의 다른 글

| Choose Your Weapon:Survival Strategies for Depressed AI Academics (0) | 2023.04.18 |

|---|---|

| [RL] Soft Actor-Critic (a.k.a SAC) (0) | 2023.04.12 |

| [RL] Deep Deterministic Policy Gradient (A.K.A DDPG) (0) | 2023.04.04 |

| [RL] M1 Mac Mujoco_py 설치 (gcc@9 error) (0) | 2023.03.29 |

| [RL] A3C (비동기 Advantage Actor-Critic) 정리 (0) | 2023.03.28 |